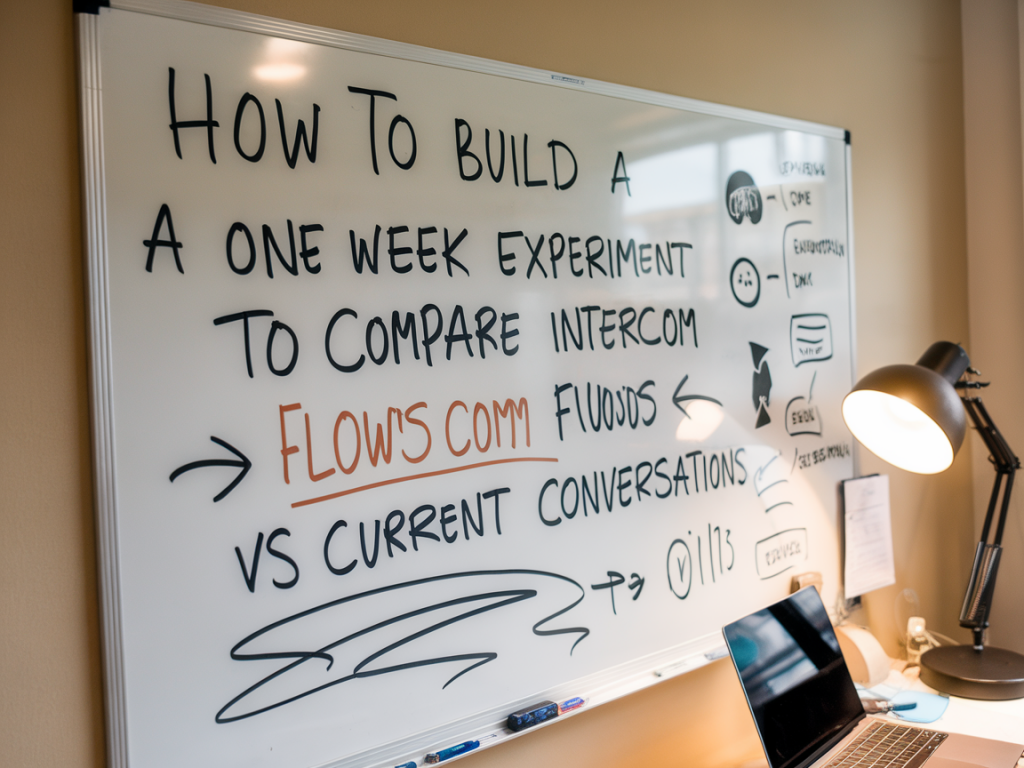

I often get asked how to run fast, low-risk experiments that actually tell you whether a new automation or conversational flow is worth rolling out. Recently I needed to compare Intercom’s outbound flow/series approach with our existing live conversation model — and instead of a vague pilot, I designed a one-week experiment that gave clear, actionable results. Below I share the exact approach I used, the decisions to make up front, and the analysis steps that made the outcome unambiguous.

Why a one-week experiment?

A one-week window hits a sweet spot: it’s short enough to reduce operational risk and internal resistance, but long enough to capture typical weekday and weekend behaviour for many B2C and SaaS businesses. I pick one week when traffic and support load are representative (avoid launch weeks, marketing spikes, or outages). The aim is to get a directional answer quickly — is the Intercom flow improving key outcomes or not?

Define the hypothesis and primary metric

Start by writing a single clear hypothesis. For example:

- Hypothesis: An Intercom proactive flow that asks a qualifying question and routes users to a targeted FAQ will reduce median first response time and increase self-service deflection.

Then pick one primary metric that will determine success. I like a primary metric plus 2–3 secondary metrics. For this experiment I used:

- Primary metric: Conversation resolution rate without agent transfer within 48 hours (i.e., successful deflection).

- Secondary metrics:

  - Median time to first response (TTR)

  - Customer satisfaction (CSAT) post-conversation

  - Number of replies per conversation

  - Support cost proxy: % of conversations requiring agent escalation

Design the experiment: groups and traffic split

My goal was a clean A/B comparison between the Intercom flow (treatment) and the current conversations (control). I recommend random assignment at the visitor or user level rather than session level to avoid cross-contamination.

- Split: 50/50 randomly by user ID or cookie. If you have fewer visitors, you can use 60/40 to give the treatment slightly more exposure, at the cost of a little statistical power compared with an even split.

- Segment exclusions: exclude logged-in admins, internal IP ranges, and users in active sales or onboarding experiments.

- Duration: 7 calendar days, covering all days of the week.

- Unit of analysis: unique conversation thread initiated by the user.
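One common way to get stable user-level assignment is to hash the user ID (or cookie value) into a bucket. The sketch below is a minimal illustration, not what Intercom does internally; the function name `assign_group`, the salt string, and the `treatment_share` parameter are all assumptions of mine.

```python
import hashlib


def assign_group(user_id: str,
                 salt: str = "intercom-flow-test",  # hypothetical experiment name
                 treatment_share: float = 0.5) -> str:
    """Deterministically map a user ID (or cookie value) to a group.

    The same user always lands in the same group, which is what keeps
    returning users from seeing both experiences.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 8 hex characters into a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "treatment" if bucket < treatment_share else "control"


# Example: a 50/50 split, and a 60/40 split for lower-traffic sites.
print(assign_group("user-123"))
print(assign_group("user-123", treatment_share=0.6))
```

Changing the salt reshuffles everyone, so keep it fixed for the whole week and use a new one for any follow-up test.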

Build the Intercom flow

Don’t over-engineer the flow — keep it focused on the hypothesis. My flow had three elements:

- Trigger: user clicks on Help or opens messenger after spending at least 30 seconds on the billing or integrations pages.

- Proactive message: a short qualifying question (e.g., “Are you reaching out about billing, integrations, or something else?”)

- Action: route the user to an article or a micro-form for common issues; after a targeted answer, close with a “Did that help?” CTA that resolves the conversation automatically if the user answers yes.

I used Intercom’s “Series” (messages + actions) and bots to implement this. If you use other platforms (Zendesk, Drift, Freshdesk, HubSpot), the same concept applies: ask one qualifying question, provide targeted help, then measure whether the user was satisfied or escalated.

Prepare your control (current conversations)

For the control group, leave the existing conversational path intact — typically the user opens the messenger and starts a live conversation or browses help articles without a proactive flow. Document exactly what the control experience is so you can confidently attribute differences to the new flow.

Instrumentation: what to track

Accurate instrumentation is the difference between guesswork and insight. I tracked the following:

- Conversation ID, user ID (hashed), group (treatment/control)

- Start timestamp and close timestamp

- Whether conversation was resolved via automation or escalated to an agent

- Time to first response (automated or agent)

- Number of messages from user and agent

- CSAT score if available (post-conversation survey)

- Revenue-related tags (if the conversation referenced billing/upgrade)

Export data daily to a central place (CSV or BI tool). I used Intercom’s export API and a simple ETL into a Google Sheet and Looker dashboard so I could monitor results in near real-time.
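To make the tracking list concrete, here is a rough sketch of one row per conversation and a CSV writer for the daily export. The field names and the `ConversationRecord` class are my own illustration, not Intercom's export schema, so map them to whatever your export actually returns.

```python
import csv
import hashlib
import io
from dataclasses import asdict, dataclass, fields
from typing import Iterable, Optional, TextIO


@dataclass
class ConversationRecord:
    # Hypothetical field names mirroring the tracking list above.
    conversation_id: str
    user_id_hash: str
    group: str                       # "treatment" or "control"
    started_at: str                  # ISO 8601 timestamp
    closed_at: Optional[str]         # None while the conversation is open
    escalated_to_agent: bool
    first_response_seconds: Optional[float]
    user_messages: int
    agent_messages: int
    csat: Optional[int]              # None if the survey was not answered
    revenue_tag: Optional[str]       # e.g. "billing" or "upgrade"


def hash_user_id(user_id: str) -> str:
    """Hash the raw user ID so the export carries no direct identifier."""
    return hashlib.sha256(user_id.encode("utf-8")).hexdigest()


def write_csv(records: Iterable[ConversationRecord], out: TextIO) -> None:
    """Write records with a header row; None values become empty cells."""
    names = [f.name for f in fields(ConversationRecord)]
    writer = csv.DictWriter(out, fieldnames=names)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Example with made-up values.
example = ConversationRecord(
    "c-1", hash_user_id("user-123"), "treatment",
    "2024-03-04T10:00:00", "2024-03-04T10:12:00",
    False, 5.0, 2, 0, 4, "billing",
)
buffer = io.StringIO()
write_csv([example], buffer)
print(buffer.getvalue())
```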

Sample size and variability

Don’t perform complex power calculations for a one-week experiment unless you have very low volume. Instead, estimate expected conversation counts and look for effect sizes that matter operationally (e.g., a 10–15% increase in deflection). If your weekly volume is low (under 200 conversations), extend the duration or accept higher uncertainty. For mid-to-high volume teams (500+ conversations/week) a week usually gives enough signal for directional decisions.
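If you want a quick sanity check on whether your volume can show a 10-point effect, a back-of-the-envelope margin of error is usually enough. The sketch below uses the standard normal approximation for the difference between two proportions; the 35% and 45% deflection rates are illustrative assumptions, not measured values.

```python
import math


def diff_margin_of_error(n_control: int, n_treatment: int,
                         p_control: float, p_treatment: float,
                         z: float = 1.96) -> float:
    """Approximate 95% margin of error on (p_treatment - p_control)."""
    variance = (p_control * (1 - p_control) / n_control
                + p_treatment * (1 - p_treatment) / n_treatment)
    return z * math.sqrt(variance)


# Assumed rates: 35% deflection in control, 45% in treatment.
for weekly_volume in (200, 500, 1000):
    per_group = weekly_volume // 2  # even split
    moe = diff_margin_of_error(per_group, per_group, 0.35, 0.45)
    print(f"{weekly_volume} conversations/week: +/- {moe * 100:.1f} pp")
```

Under these assumptions, a 10-point lift is smaller than the margin of error at 200 conversations a week and larger than it at 500, which matches the rule of thumb above.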

Run the experiment: operational checklist

- Announce the experiment to your support team so they know to expect routing differences and can avoid manual overrides.

- Confirm tracking is logging group assignment for every conversation.

- Monitor for bugs hourly on day 1 and twice daily afterwards.

- Keep an eye on CSAT and escalation rates — if negative impact is large, stop the experiment early.

- Log qualitative feedback from agents and customers — sometimes the numbers hide practical friction points.

Quick analysis approach

At the end of the week, I run a short, structured analysis:

- Aggregate counts: total conversations per group, resolved via automation, escalated to agent.

- Compute primary metric: % resolved without agent within 48 hours per group.

- Compare secondary metrics: median TTR, mean messages per conversation, CSAT average.

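- Run basic statistical checks: for proportions use a two-proportion z-test; for skewed measures such as TTR use a non-parametric test (Mann–Whitney U), which compares the two distributions rather than the medians alone. If you don’t have statistical tooling, compute confidence intervals or at least check whether differences are materially meaningful (e.g., more than 10 percentage points on the primary metric).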

- Segment breakdowns: new vs returning users, device type, page of origin (billing vs product), and time of day.
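The primary metric from the steps above can be computed straight from the exported rows. This is a minimal sketch that assumes each conversation is a dictionary with `group`, `started_at`, `closed_at`, and `escalated_to_agent` keys (hypothetical names) and timestamps in ISO 8601 format without a timezone suffix.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable


def deflected(conv: dict, window: timedelta = timedelta(hours=48)) -> bool:
    """True if the conversation closed without an agent inside the window."""
    if conv["escalated_to_agent"] or conv["closed_at"] is None:
        return False
    started = datetime.fromisoformat(conv["started_at"])
    closed = datetime.fromisoformat(conv["closed_at"])
    return closed - started <= window


def deflection_rate_by_group(conversations: Iterable[dict]) -> Dict[str, float]:
    """Share of conversations per group that count as deflected."""
    totals: Dict[str, int] = {}
    hits: Dict[str, int] = {}
    for conv in conversations:
        group = conv["group"]
        totals[group] = totals.get(group, 0) + 1
        hits[group] = hits.get(group, 0) + (1 if deflected(conv) else 0)
    return {group: hits[group] / totals[group] for group in totals}
```

Conversations still open at export time count as not deflected here, which is a conservative choice; you may prefer to exclude them until the 48-hour window has passed.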

| Metric | Control | Treatment (Intercom flow) | Delta |
|---|---|---|---|
| % Resolved without agent | 35% | 52% | +17 pp |
| Median TTR | 4h 20m | 1h 10m | -3h 10m |
| CSAT | 4.2/5 | 4.0/5 | -0.2 |
| Escalation rate | 65% | 48% | -17 pp |

The table above is an example of the kind of summary I produce. In my run, the Intercom flow produced a meaningful increase in deflection and lower TTR, but CSAT dipped slightly — something I investigated with qualitative follow-ups.
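If you don't have a stats package to hand, the two-proportion z-test fits in a few lines of standard-library Python. The sketch below uses the pooled-variance form of the test. The counts in the example (300 conversations per group) are hypothetical, chosen only to match the example percentages, since the summary table does not include raw counts.

```python
import math
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both groups share one rate."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups are all-success or all-failure: no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 105/300 deflected in control, 156/300 in treatment.
z, p = two_proportion_z_test(105, 300, 156, 300)
print(f"z = {z:.2f}, p = {p:.5f}")
```

The normal approximation is reasonable when each group has at least a few dozen successes and failures; with very small counts, treat the p-value as a rough guide only.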

Dig into the why: qualitative checks

Numbers tell you what changed; conversations tell you why. I sampled conversations from both groups and looked for patterns:

- For treatment: Did users get correct content from the article? Did the qualifying question misclassify intent? Were users annoyed by an unwanted proactive message?

- For control: Were agents handling repetitive questions that could be automated? Were help articles discoverable?

In my experiment, some users in the Intercom flow found the targeted article helpful but the CTA to mark an issue as resolved was too subtle. A quick edit to the CTA language improved CSAT in a follow-up micro-test.

Decision criteria and next steps

Before running the experiment I set explicit decision rules. For example:

- Ship: If primary metric improves by at least 10 percentage points and CSAT change is within ±0.2.

- Iterate: If primary metric improves but CSAT drops >0.2 — adjust flow and re-test.

- Abort: If primary metric shows no improvement or escalations increase materially.

These rules make the outcome objective and avoid paralysis by analysis. After the week, I followed the “iterate” path: we adjusted the messaging, added a clearer “Yes that solved it” button, and scheduled a second short run focused on CSAT improvements.
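Writing the rules down as a small function before the test starts is one way to keep yourself honest. This is only a sketch: the function name `decide` is mine, the 5-point threshold for a "material" rise in escalations is an assumed value, and cases the rules don't cover (such as an improvement under 10 points) fall through to "iterate" as a default I chose.

```python
def decide(primary_delta_pp: float,
           csat_delta: float,
           escalation_delta_pp: float,
           material_escalation_pp: float = 5.0) -> str:
    """Map experiment results to "ship", "iterate", or "abort".

    primary_delta_pp: change in deflection rate, in percentage points.
    csat_delta: change in average CSAT on the 5-point scale.
    escalation_delta_pp: change in escalation rate, in percentage points.
    material_escalation_pp: assumed cut-off for a "material" increase.
    """
    # Round to avoid floating-point noise around the 0.2 boundary.
    csat_delta = round(csat_delta, 2)

    if primary_delta_pp <= 0 or escalation_delta_pp >= material_escalation_pp:
        return "abort"
    if csat_delta < -0.2:
        return "iterate"
    # Assumption: a CSAT gain above +0.2 does not block shipping.
    if primary_delta_pp >= 10:
        return "ship"
    # Assumption: a small improvement is worth another round, not a rollout.
    return "iterate"


# Made-up inputs for illustration.
print(decide(12.0, -0.1, -12.0))  # ship
print(decide(12.0, -0.4, -12.0))  # iterate
print(decide(-1.0, 0.0, 1.0))     # abort
```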

Pitfalls to avoid

- Not randomising properly — leads to biased results.

- Changing other variables mid-test (e.g., updating help articles) — isolate the test.

- Ignoring volume — low sample sizes make results weak.

- Relying on a single metric — combine quantitative and qualitative signals.

Running a one-week experiment to compare Intercom flows with current conversations is a powerful way to make data-driven decisions without a lengthy rollout. Keep the hypothesis tight, instrument well, monitor closely, and pair numbers with actual conversations. That’s how you move from opinions to repeatable outcomes — quickly.