I run a lot of experiments with chatbots and conversational automation, and one question keeps coming up: how do we prove the bot is actually improving customer satisfaction? It’s tempting to point to a single CSAT score and call it a day, but in practice you need a mix of quantitative and qualitative signals to build a convincing story. Below I share the set of metrics I always track, why each matters, how to measure them cleanly, and a few practical tips for avoiding common attribution traps.

Why a single CSAT number rarely tells the whole story

CSAT (customer satisfaction) is useful, but it’s noisy and easy to misinterpret when you add automation. A bot can make a conversation faster but less helpful; CSAT might stay flat or even decline while other outcomes improve. Conversely, a bot that only handles easy, low-effort queries can inflate its own CSAT because the tough issues never reach its survey, yet customers with complex problems may be worse off overall.

So instead of asking “did CSAT change?”, ask “which parts of the experience changed, and did those changes align with customer needs and business goals?”

Core metrics I track to prove a chatbot improves satisfaction

- CSAT (post-interaction): Still essential. Ask after both bot-only and bot+agent interactions, and segment by intent complexity.

- Task Completion / Success Rate: Measures whether the user achieved their objective (e.g., password reset completed, refund initiated). This is often the most direct proxy for satisfaction.

- Containment / Deflection Rate: Percentage of inquiries resolved by the bot without human transfer. High containment is good if completion and satisfaction remain acceptable.

- Escalation / Handoff Rate: How often the bot transfers to a human, and why. A low handoff rate isn’t always positive: it can mean users are stuck looping with the bot instead of reaching someone who can help.

- Time to Resolution or Time to First Value: Speed matters. Measure median time from initiation to resolution for bot vs human paths.

- Customer Effort Score (CES): Users often prefer low-effort solutions; CES complements CSAT by focusing on perceived effort.

- Sentiment & Qualitative Feedback: Use NLP sentiment analysis on transcripts and collect open-ended feedback—this surfaces friction the numbers miss.

- Repeat Contacts / Reopen Rate: Are customers contacting you again for the same issue after interacting with the bot? High reopens signal unresolved problems.

- Conversion & Business KPIs: For commerce use cases, measure cart completion, refunds, cancellations, or subscription retention tied to bot interactions.

- Cost per Contact / Cost Savings: Operational metric showing whether the bot reduces agent workload and cost while preserving experience.
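
To make the definitions above concrete, here is a minimal sketch of how several of these rates could be computed from a session log. The `Session` fields and the `summarize` helper are hypothetical names of my own, not part of any particular platform, and treating a 4 or 5 as "satisfied" is an assumed convention you should swap for your own survey scale.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Session:
    """One bot conversation. All field names are hypothetical."""
    task_completed: bool                    # objective signal, e.g. CRM status change
    handed_off: bool                        # transferred to a human agent
    seconds_to_resolution: Optional[float]  # None if the session never resolved
    reopened: bool                          # same issue came back later
    csat: Optional[int] = None              # 1-5 survey answer, None if unanswered


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Headline rates over ALL sessions, abandoned ones included."""
    if not sessions:
        raise ValueError("no sessions to summarize")
    total = len(sessions)
    completed = [s for s in sessions if s.task_completed]
    contained = [s for s in completed if not s.handed_off]
    times = [s.seconds_to_resolution for s in sessions
             if s.seconds_to_resolution is not None]
    scores = [s.csat for s in sessions if s.csat is not None]
    nan = float("nan")
    return {
        "completion_rate": len(completed) / total,
        "containment_rate": len(contained) / total,
        "handoff_rate": sum(s.handed_off for s in sessions) / total,
        "median_seconds_to_resolution": median(times) if times else nan,
        # Reopens only make sense for sessions that claimed completion.
        "reopen_rate": (sum(s.reopened for s in completed) / len(completed)
                        if completed else nan),
        # Assumed convention: a 4 or 5 counts as "satisfied".
        "csat_satisfied_share": (sum(score >= 4 for score in scores) / len(scores)
                                 if scores else nan),
    }
```

Note that containment here requires both completion and no handoff, so a session the bot simply closes without solving anything doesn't count as contained. Run the same function separately on bot and human cohorts, and on simple versus complex intents, to get comparable numbers.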

How to measure these metrics reliably

Measurement is as much about methodology as it is about picking the right metrics. Here are the approaches I use to reduce bias and make results defensible.

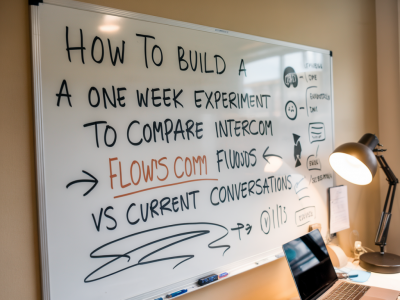

- A/B or controlled rollouts: Run an experiment where a random subset of users sees the bot, while another group follows the legacy path (or sees a simpler bot). This isolates the bot’s effect from seasonal or product changes.

- Segment by intent complexity: Split metrics into “simple” and “complex” intents. Bots should excel at simple tasks; for complex flows, prioritize smooth handoffs.

- Instrument task success: Track concrete signals of resolution—API calls, status changes in your CRM, or page events. Don’t rely solely on self-reported completion.

- Attribute using session and cross-channel IDs: Link bot sessions to the same customer across channels so you can see downstream effects (e.g., did a bot interaction reduce calls later that week?).

- Use an attribution window: Some impacts appear later (reduced churn, fewer returns). Define a reasonable window—7, 30 or 90 days—based on the customer journey.
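
For the controlled rollout, one simple way to check whether a difference in completion rate is more than noise is a two-proportion z-test, run per intent segment. This is a sketch under my own assumptions rather than the only valid method: the function name and the counts in the usage example are illustrative, and the normal approximation needs reasonably large samples in each group.

```python
from math import erfc, sqrt


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the rate difference b minus a."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one session")
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


if __name__ == "__main__":
    # Illustrative counts only: (legacy completions, legacy n, bot completions, bot n)
    segments = {
        "simple": (400, 1000, 450, 1000),
        "complex": (300, 800, 290, 800),
    }
    for name, counts in segments.items():
        z, p = two_proportion_z_test(*counts)
        print(f"{name}: z={z:.2f}, p={p:.3f}")
```

Testing each segment separately keeps a strong result on simple intents from hiding a regression on complex ones. If you test many segments, remember that some will look significant by chance.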

Practical dashboard — what to show stakeholders

I like a compact dashboard that answers three stakeholder questions: Is the bot solving problems? Is it improving customer experience? Is it delivering value?

| Category | Key metric | Why it matters |
|---|---|---|
| Effectiveness | Task Completion Rate | Direct measure of whether users get what they need from the bot. |
| Experience | CSAT / CES / Sentiment | Shows perceived quality and effort; detects friction that completion metrics miss. |
| Operational | Containment Rate & Cost/Contact | Quantifies load reduction on agents and potential savings. |
| Risk | Reopen Rate & Escalation Reasons | Identifies unresolved issues and poor handoffs that harm satisfaction. |

Qualitative signals you shouldn’t ignore

Numbers tell a lot but not everything. Read conversation transcripts and sample open-text CSAT responses weekly. I often find recurring UX issues—confusing microcopy, unclear fallback messages, or overly rigid dialog trees—that don’t show up in aggregated metrics until they create churn.

Also run periodic usability sessions where real customers interact with the bot while you observe. Watching a customer stumble over phrasing or abandon a flow is far more persuasive to product and design teams than a chart.

Common attribution pitfalls and how to avoid them

- Survivorship bias: Only looking at completed sessions inflates success rates. Include abandoned or errored sessions in your analysis.

- Selection bias: If power users or certain segments disproportionately use the bot, results won’t generalize. Segment your analysis.

- Feedback bias: Post-interaction surveys tend to have higher response rates from extremes (very happy or very angry). Weight or normalize scores if response demographics differ between bot and human channels.

- False containment: A bot marking an issue as “resolved” doesn’t mean the customer is satisfied. Cross-validate with reopen rates and follow-up behavior.
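
The false containment check can be sketched as a join between sessions the bot marked resolved and later contacts from the same customer about the same issue, inside a fixed window. The tuple layout, the `issue_key` idea, and the 7-day default are assumptions for illustration; in practice the inputs would come from your CRM or ticketing export.

```python
from datetime import datetime, timedelta

# Hypothetical record shape: (customer_id, issue_key, timestamp)
Record = tuple[str, str, datetime]


def false_containment_rate(resolved: list[Record],
                           contacts: list[Record],
                           window_days: int = 7) -> float:
    """Share of bot-'resolved' sessions followed by a repeat contact
    about the same issue, on any channel, within the window."""
    if not resolved:
        raise ValueError("no resolved sessions to evaluate")
    window = timedelta(days=window_days)

    # Index every contact by (customer, issue) for quick lookup.
    by_key: dict[tuple[str, str], list[datetime]] = {}
    for customer_id, issue_key, at in contacts:
        by_key.setdefault((customer_id, issue_key), []).append(at)

    flagged = 0
    for customer_id, issue_key, resolved_at in resolved:
        later = by_key.get((customer_id, issue_key), [])
        # Count only contacts strictly after resolution and inside the window.
        if any(resolved_at < t <= resolved_at + window for t in later):
            flagged += 1
    return flagged / len(resolved)
```

A rate that climbs as you widen `window_days` suggests problems that resurface slowly, which is a reason to report the metric at more than one window rather than picking the most flattering one.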

Examples from the field

At a mid-sized SaaS client, we saw CSAT drop 3 points immediately after launching a bot. Panic ensued. But when we layered other metrics we discovered: completion rate for common tasks improved by 25%, containment rose, and repeat contacts fell—yet sentiment on complex flows was poor. The solution was targeted refinements: improved fallback copy, better intent routing, and a clearer handoff path. After those fixes, CSAT rebounded and the positive operational metrics persisted.

Another example: a retailer used a bot to handle returns and refunds. Refund completions increased (good), but repeat contacts for the same order also increased. Investigating transcripts revealed the bot was missing a required order verification step, causing downstream friction. Fixing that single validation reduced reopens and improved CSAT.

Quick checklist before you report results

- Have you compared bot vs non-bot cohorts with similar intent mix?

- Is task completion instrumented by objective signals (API/CRM) and not just user report?

- Did you include abandoned sessions and follow-ups in analysis?

- Are qualitative themes from transcripts aligned with quantitative signals?

- Have you defined an attribution window for downstream metrics like retention or churn?

If you want, I can draft a measurement plan tailored to your use cases (support, ecommerce, or product help) and suggest a dashboard layout you can plug into Looker, Tableau, or a simpler Google Sheets / Looker Studio (formerly Data Studio) setup. I often recommend starting with the compact dashboard above and expanding as you validate signals: measure what matters, instrument rigorously, and iterate based on evidence.